Lawrence Lessig on Code and Law (Code): Difference between revisions

Revision by Anna (minor edit; →Bytes that sniff (p. 140f): paragraph)

Revision by Anna (→Machines that count (p. 141f): cut)


Revision as of 07:23, 30 May 2008

All excerpts are from Codev2 by Lawrence Lessig


Borders (p. 10f)

It was a very ordinary dispute, this argument between Martha Jones and her neighbors. It was the sort of dispute that people have had since the start of neighborhoods. It didn’t begin in anger. It began with a misunderstanding. In this world, misunderstandings like this are far too common. Martha thought about that as she wondered whether she should stay; there were other places she could go. Leaving would mean abandoning what she had built, but frustrations like this were beginning to get to her. Maybe, she thought, it was time to move on.

The argument was about borders — about where her land stopped. It seemed like a simple idea, one you would have thought the powers-that-be would have worked out many years before. But here they were, her neighbor Dank and she, still fighting about borders. Or rather, about something fuzzy at the borders — about something of Martha’s that spilled over into the land of others. This was the fight, and it all related to what Martha did.

Martha grew flowers. Not just any flowers, but flowers with an odd sort of power. They were beautiful flowers, and their scent entranced. But, however beautiful, these flowers were also poisonous. This was Martha’s weird idea: to make flowers of extraordinary beauty which, if touched, would kill. Strange no doubt, but no one said that Martha wasn’t strange. She was unusual, as was this neighborhood. But sadly, disputes like this were not.

The start of the argument was predictable enough. Martha’s neighbor, Dank, had a dog. Dank’s dog died. The dog died because it had eaten a petal from one of Martha’s flowers. A beautiful petal, and now a dead dog. Dank had his own ideas about these flowers, and about this neighbor, and he expressed those ideas — perhaps with a bit too much anger, or perhaps with anger appropriate to the situation.

“There is no reason to grow deadly flowers,” Dank yelled across the fence. “There’s no reason to get so upset about a few dead dogs,” Martha replied. “A dog can always be replaced. And anyway, why have a dog that suffers when dying? Get yourself a pain-free-death dog, and my petals will cause no harm.”

I came into the argument at about this time. I was walking by, in the way one walks in this space. (At first I had teleported to get near, but we needn’t complicate the story with jargon. Let’s just say I was walking.) I saw the two neighbors becoming increasingly angry with each other. I had heard about the disputed flowers — about how their petals carried poison. It seemed to me a simple problem to solve, but I guess it’s simple only if you understand how problems like this are created.

Dank and Martha were angry because in a sense they were stuck. Both had built a life in the neighborhood; they had invested many hours there. But both were coming to understand its limits. This is a common condition: We all build our lives in places with limits. We are all disappointed at times. What was different about Dank and Martha?

One difference was the nature of the space, or context, where their argument was happening. This was not “real space” but virtual space. It was part of what I call “cyberspace.” The environment was a “massively multiple online game” (“MMOG”), and MMOG space is quite different from the space we call real.

Real space is the place where you are right now: your office, your den, maybe by a pool. It’s a world defined by both laws that are man-made and others that are not. “Limited liability” for corporations is a man-made law. It means that the directors of a corporation (usually) cannot be held personally liable for the sins of the company. Limited life for humans is not a man-made law: That we all will die is not the result of a decision that Congress made. In real space, our lives are subject to both sorts of law, though in principle we could change one sort.

But there are other sorts of laws in real space as well. You bought this book, I trust, or you borrowed it from someone who did. If you stole it, you are a thief, whether you are caught or not. Our language is a norm; norms are collectively determined. As our norms have been determined, your “stealing” makes you a thief, and not just because you took it. There are plenty of ways to take something but not be thought of as a thief. If you came across a dollar blowing in the wind, taking the money will not make you a thief; indeed, not taking the money makes you a chump. But stealing this book from the bookstore (even when there are so many left for others) marks you as a thief. Social norms make it so, and we live life subject to these norms.

Some of these norms can be changed collectively, if not individually. I can choose to burn my draft card, but I cannot choose whether doing so will make me a hero or a traitor. I can refuse an invitation to lunch, but I cannot choose whether doing so will make me rude. I have choices in real life, but escaping the consequences of the choices I make is not one of them. Norms in this sense constrain us in ways that are so familiar as to be all but invisible.

MMOG space is different. It is, first of all, a virtual space—like a cartoon on a television screen, sometimes rendered to look three-dimensional. But unlike a cartoon, MMOG space enables you to control the characters on the screen in real time. At least, you control your character — one among many characters controlled by many others in this space. One builds the world one will inhabit here.

Regulation by Code (p. 24f)

The story about Martha and Dank is a clue to answering this question about regulability. If in MMOG space we can change the laws of nature — make possible what before was impossible, or make impossible what before was possible — why can’t we change regulability in cyberspace? Why can’t we imagine an Internet or a cyberspace where behavior can be controlled because code now enables that control?

For this, importantly, is just what MMOG space is. MMOG space is “regulated,” though the regulation is special. In MMOG space regulation comes through code. Important rules are imposed, not through social sanctions, and not by the state, but by the very architecture of the particular space. A rule is defined, not through a statute, but through the code that governs the space.

This is the second theme of this book: There is regulation of behavior on the Internet and in cyberspace, but that regulation is imposed primarily through code. The differences in the regulations effected through code distinguish different parts of the Internet and cyberspace. In some places, life is fairly free; in other places, it is more controlled. And the difference between these spaces is simply a difference in the architectures of control — that is, a difference in code.

If we combine the first two themes, then, we come to a central argument of the book: The regulability described in the first theme depends on the code described in the second. Some architectures of cyberspace are more regulable than others; some architectures enable better control than others. Therefore, whether a part of cyberspace — or the Internet generally — can be regulated turns on the nature of its code. Its architecture will affect whether behavior can be controlled. To follow Mitch Kapor, its architecture is its politics.

And from this a further point follows: If some architectures are more regulable than others — if some give governments more control than others — then governments will favor some architectures more than others. Favor, in turn, can translate into action, either by governments, or for governments. Either way, the architectures that render space less regulable can themselves be changed to make the space more regulable. (By whom, and why, is a matter we take up later.) This fact about regulability is a threat to those who worry about governmental power; it is a reality for those who depend upon governmental power. Some designs enable government more than others; some designs enable government differently; some designs should be chosen over others, depending upon the values at stake.

Identity and authentication (p. 43ff)

Identity and authentication in cyberspace and real space are in theory the same. In practice they are quite different. To see that difference, however, we need to see more about the technical detail of how the Net is built.

As I’ve already said, the Internet is built from a suite of protocols referred to collectively as “TCP/IP.” At its core, the TCP/IP suite includes protocols for exchanging packets of data between two machines “on” the Net. Brutally simplified, the system takes a bunch of data (a file, for example), chops it up into packets, and slaps on the address to which the packet is to be sent and the address from which it is sent. The addresses are called Internet Protocol addresses, and they look like this: 128.34.35.204. Once properly addressed, the packets are then sent across the Internet to their intended destination. Machines along the way (“routers”) look at the address to which the packet is sent, and depending upon an (increasingly complicated) algorithm, the machines decide to which machine the packet should be sent next. A packet could make many “hops” between its start and its end. But as the network becomes faster and more robust, those many hops seem almost instantaneous.
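The "brutally simplified" description above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Packet` fields, the 8-byte chunk size, the router names, and the `ROUTES` table are all hypothetical, and real IP routing is far more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str        # address the packet says it comes from
    dst: str        # address the packet is to be sent to
    seq: int        # position of this chunk in the original data
    payload: bytes


def packetize(data: bytes, src: str, dst: str, size: int = 8) -> list[Packet]:
    """Chop data into fixed-size chunks and stamp each with both addresses."""
    return [
        Packet(src, dst, seq, data[offset:offset + size])
        for seq, offset in enumerate(range(0, len(data), size))
    ]


# Hypothetical next-hop tables: router -> {destination address: next router}.
# The special value "deliver" means the packet has reached its destination.
ROUTES = {
    "router-a": {"128.34.35.204": "router-b"},
    "router-b": {"128.34.35.204": "deliver"},
}


def forward(packet: Packet, first_router: str) -> list[str]:
    """Follow next-hop lookups on the destination address; return the hops taken."""
    hops = []
    router = first_router
    while router != "deliver":
        hops.append(router)
        router = ROUTES[router][packet.dst]
    return hops


def reassemble(packets: list[Packet]) -> bytes:
    """Put the chunks back in order at the receiving end."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


packets = packetize(b"a file, for example", src="10.0.0.7", dst="128.34.35.204")
```

Note that each router in this sketch consults only `packet.dst`; nothing else about the packet influences where it goes next.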

In the terms I’ve described, there are many attributes that might be associated with any packet of data sent across the network. For example, the packet might come from an e-mail written by Al Gore. That means the e-mail is written by a former vice president of the United States, by a man knowledgeable about global warming, by a man over the age of 50, by a tall man, by an American citizen, by a former member of the United States Senate, and so on. Imagine also that the e-mail was written while Al Gore was in Germany, and that it is about negotiations for climate control. The identity of that packet of information might be said to include all these attributes.

But the e-mail itself authenticates none of these facts. The e-mail may say it’s from Al Gore, but the TCP/IP protocol alone gives us no way to be sure. It may have been written while Gore was in Germany, but he could have sent it through a server in Washington. And of course, while the system eventually will figure out that the packet is part of an e-mail, the information traveling across TCP/IP itself does not contain anything that would indicate what the content was. The protocol thus doesn’t authenticate who sent the packet, where they sent it from, and what the packet is. All it purports to assert is an IP address to which the packet is to be sent, and an IP address from which the packet comes. From the perspective of the network, this other information is unnecessary surplus. Like a daydreaming postal worker, the network simply moves the data and leaves its interpretation to the applications at either end.
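A small sketch can make the point about unauthenticated addresses concrete. It packs a source and a destination address into eight bytes, a deliberately simplified stand-in for a real IP header (which carries more fields). The function names are hypothetical, and the sender addresses come from ranges reserved for documentation.

```python
import ipaddress
import struct


def build_header(src: str, dst: str) -> bytes:
    """Pack two IPv4 addresses into 8 bytes (simplified; not a real IP header)."""
    return struct.pack(
        "!II",
        int(ipaddress.IPv4Address(src)),
        int(ipaddress.IPv4Address(dst)),
    )


def read_header(header: bytes) -> tuple[str, str]:
    """Recover the asserted source and destination addresses."""
    src, dst = struct.unpack("!II", header)
    return str(ipaddress.IPv4Address(src)), str(ipaddress.IPv4Address(dst))


# The sender fills in the source field itself; nothing in the format checks it.
honest = build_header("192.0.2.10", "128.34.35.204")
forged = build_header("198.51.100.99", "128.34.35.204")
```

Both headers are equally well formed. A reader of `forged` learns only which source address was asserted, not who wrote the data, where they were, or what the payload is.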

This minimalism in the Internet’s design was not an accident. It reflects a decision about how best to design a network to perform a wide range of very different functions. Rather than build into this network a complex set of functionality thought to be needed by every single application, this network philosophy pushes complexity to the edge of the network — to the applications that run on the network, rather than the network’s core. The core is kept as simple as possible. Thus if authentication about who is using the network is necessary, that functionality should be performed by an application connected to the network, not by the network itself. Or if content needs to be encrypted, that functionality should be performed by an application connected to the network, not by the network itself.
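As one possible illustration of pushing authentication to the edge, the sketch below keeps the "network" as a function that merely passes bytes along, while the two endpoints authenticate messages themselves with an HMAC from Python's standard library. The shared key and the function names are assumptions made for the example; real applications would also need key distribution, which is not shown.

```python
import hashlib
import hmac
from typing import Optional

# Assumption: both endpoints already hold this key; the network never sees it used.
SHARED_KEY = b"hypothetical-shared-secret"
TAG_LENGTH = 32  # size of a SHA-256 digest in bytes


def network_deliver(data: bytes) -> bytes:
    """The simple core: moves bytes and knows nothing about what they mean."""
    return data


def send(message: bytes, key: bytes = SHARED_KEY) -> bytes:
    """Sending application: prepend an authentication tag to the message."""
    tag = hmac.new(key, message, hashlib.sha256).digest()
    return tag + message


def receive(wire: bytes, key: bytes = SHARED_KEY) -> Optional[bytes]:
    """Receiving application: return the message only if its tag verifies."""
    tag, message = wire[:TAG_LENGTH], wire[TAG_LENGTH:]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return message if hmac.compare_digest(tag, expected) else None
```

The design choice to notice is that `network_deliver` would not change at all if the endpoints switched to a different authentication scheme, or to none.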

This design principle was named by network architects Jerome Saltzer, David Clark, and David Reed as the end-to-end principle. It has been a core principle of the Internet’s architecture, and, in my view, one of the most important reasons that the Internet produced the innovation and growth that it has enjoyed. But its consequences for purposes of identification and authentication make both extremely difficult with the basic protocols of the Internet alone. It is as if you were in a carnival funhouse with the lights dimmed to darkness and voices coming from around you, but from people you do not know and from places you cannot identify. The system knows that there are entities out there interacting with it, but it knows nothing about who those entities are. While in real space — and here is the important point — anonymity has to be created, in cyberspace anonymity is the given.

This difference in the architectures of real space and cyberspace makes a big difference in the regulability of behavior in each. The absence of relatively self-authenticating facts in cyberspace makes it extremely difficult to regulate behavior there. If we could all walk around as “The Invisible Man” in real space, the same would be true about real space as well. That we’re not capable of becoming invisible in real space (or at least not easily) is an important reason that regulation can work.

Thus, for example, if a state wants to control children’s access to “indecent” speech on the Internet, the original Internet architecture provides little help. The state can say to websites, “don’t let kids see porn.” But the website operators can’t know — from the data provided by the TCP/IP protocols at least — whether the entity accessing its web page is a kid or an adult. That’s different, again, from real space. If a kid walks into a porn shop wearing a mustache and stilts, his effort to conceal is likely to fail. The attribute “being a kid” is asserted in real space, even if efforts to conceal it are possible. But in cyberspace, there’s no need to conceal, because the facts you might want to conceal about your identity (i.e., that you’re a kid) are not asserted anyway.

All this is true, at least, under the basic Internet architecture. But as the last ten years have made clear, none of this is true by necessity. To the extent that the lack of efficient technologies for authenticating facts about individuals makes it harder to regulate behavior, there are architectures that could be layered onto the TCP/IP protocol to create efficient authentication. We’re far enough into the history of the Internet to see what these technologies could look like. We’re far enough into this history to see that the trend toward this authentication is unstoppable. The only question is whether we will build into this system of authentication the kinds of protections for privacy and autonomy that are needed.

A dot's life (p. 121ff)

There are many ways to think about “regulation.” I want to think about it from the perspective of someone who is regulated, or, what is different, constrained. That someone regulated is represented by this (pathetic) dot — a creature (you or me) subject to different regulations that might have the effect of constraining (or as we’ll see, enabling) the dot’s behavior. By describing the various constraints that might bear on this individual, I hope to show you something about how these constraints function together. Here then is the dot.

How is this dot “regulated”?

Let’s start with something easy: smoking. If you want to smoke, what constraints do you face? What factors regulate your decision to smoke or not?

One constraint is legal. In some places at least, laws regulate smoking — if you are under eighteen, the law says that cigarettes cannot be sold to you. If you are under twenty-six, cigarettes cannot be sold to you unless the seller checks your ID. Laws also regulate where smoking is permitted — not in O’Hare Airport, on an airplane, or in an elevator, for instance. In these two ways at least, laws aim to direct smoking behavior. They operate as a kind of constraint on an individual who wants to smoke.

But laws are not the most significant constraints on smoking. Smokers in the United States certainly feel their freedom regulated, even if only rarely by the law. There are no smoking police, and smoking courts are still quite rare. Rather, smokers in America are regulated by norms. Norms say that one doesn’t light a cigarette in a private car without first asking permission of the other passengers. They also say, however, that one needn’t ask permission to smoke at a picnic. Norms say that others can ask you to stop smoking at a restaurant, or that you never smoke during a meal. These norms effect a certain constraint, and this constraint regulates smoking behavior.

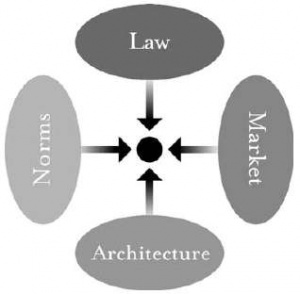

Laws and norms are still not the only forces regulating smoking behavior. The market is also a constraint. The price of cigarettes is a constraint on your ability to smoke—change the price, and you change this constraint. Likewise with quality. If the market supplies a variety of cigarettes of widely varying quality and price, your ability to select the kind of cigarette you want increases; increasing choice here reduces constraint.

Finally, there are the constraints created by the technology of cigarettes, or by the technologies affecting their supply. Nicotine-treated cigarettes are addictive and therefore create a greater constraint on smoking than untreated cigarettes. Smokeless cigarettes present less of a constraint because they can be smoked in more places. Cigarettes with a strong odor present more of a constraint because they can be smoked in fewer places. How the cigarette is, how it is designed, how it is built—in a word, its architecture—affects the constraints faced by a smoker. Thus, four constraints regulate this pathetic dot — the law, social norms, the market, and architecture — and the “regulation” of this dot is the sum of these four constraints. Changes in any one will affect the regulation of the whole. Some constraints will support others; some may undermine others. Thus, “changes in technology [may] usher in changes in . . . norms,” and the other way around. A complete view, therefore, must consider these four modalities together. So think of the four together like this:

In this drawing, each oval represents one kind of constraint operating on our pathetic dot in the center. Each constraint imposes a different kind of cost on the dot for engaging in the relevant behavior—in this case, smoking. The cost from norms is different from the market cost, which is different from the cost from law and the cost from the (cancerous) architecture of cigarettes.

The constraints are distinct, yet they are plainly interdependent. Each can support or oppose the others. Technologies can undermine norms and laws; they can also support them. Some constraints make others possible; others make some impossible. Constraints work together, though they function differently and the effect of each is distinct. Norms constrain through the stigma that a community imposes; markets constrain through the price that they exact; architectures constrain through the physical burdens they impose; and law constrains through the punishment it threatens.

We can call each constraint a “regulator,” and we can think of each as a distinct modality of regulation. Each modality has a complex nature, and the interaction among these four is also hard to describe. I’ve worked through this complexity more completely in the appendix. But for now, it is enough to see that they are linked and that, in a sense, they combine to produce the regulation to which our pathetic dot is subject in any given area.

We can use the same model to describe the regulation of behavior in cyberspace.

Law regulates behavior in cyberspace. Copyright law, defamation law, and obscenity laws all continue to threaten ex post sanction for the violation of legal rights. How well law regulates, or how efficiently, is a different question: In some cases it does so more efficiently, in some cases less. But whether better or not, law continues to threaten a certain consequence if it is defied. Legislatures enact; prosecutors threaten; courts convict.

Norms also regulate behavior in cyberspace. Talk about Democratic politics in the alt.knitting newsgroup, and you open yourself to flaming; “spoof” someone’s identity in a MUD, and you may find yourself “toaded”; talk too much in a discussion list, and you are likely to be placed on a common bozo filter. In each case, a set of understandings constrain behavior, again through the threat of ex post sanctions imposed by a community.

Markets regulate behavior in cyberspace. Pricing structures constrain access, and if they do not, busy signals do. (AOL learned this quite dramatically when it shifted from an hourly to a flat-rate pricing plan.) Areas of the Web are beginning to charge for access, as online services have for some time. Advertisers reward popular sites; online services drop low-population forums. These behaviors are all a function of market constraints and market opportunity. They are all, in this sense, regulations of the market.

Finally, an analog for architecture regulates behavior in cyberspace — code. The software and hardware that make cyberspace what it is constitute a set of constraints on how you can behave. The substance of these constraints may vary, but they are experienced as conditions on your access to cyberspace. In some places (online services such as AOL, for instance) you must enter a password before you gain access; in other places you can enter whether identified or not. In some places the transactions you engage in produce traces that link the transactions (the “mouse droppings”) back to you; in other places this link is achieved only if you want it to be. In some places you can choose to speak a language that only the recipient can hear (through encryption); in other places encryption is not an option. The code or software or architecture or protocols set these features, which are selected by code writers. They constrain some behavior by making other behavior possible or impossible. The code embeds certain values or makes certain values impossible. In this sense, it too is regulation, just as the architectures of real-space codes are regulations. As in real space, then, these four modalities regulate cyberspace. The same balance exists. As William Mitchell puts it (though he omits the constraint of the market):

Architecture, laws, and customs maintain and represent whatever balance has been struck in real space. As we construct and inhabit cyberspace communities, we will have to make and maintain similar bargains—though they will be embodied in software structures and electronic access controls rather than in architectural arrangements.

Laws, norms, the market, and architectures interact to build the environment that “Netizens” know. The code writer, as Ethan Katsh puts it, is the “architect.” But how can we “make and maintain” this balance between modalities? What tools do we have to achieve a different construction? How might the mix of real-space values be carried over to the world of cyberspace? How might the mix be changed if change is desired?

The limits in open code (p. 138f)

I’ve told a story about how regulation works, and about the increasing regulability of the Internet that we should expect. These are, as I described, changes in the architecture of the Net that will better enable government’s control by making behavior more easily monitored—or at least more traceable. These changes will emerge even if government does nothing. They are the by-product of changes made to enable e-commerce. But they will be cemented if (or when) the government recognizes just how it could make the network its tool.

That was Part I. In this part, I’ve focused upon a different regulability — the kind of regulation that is effected through the architectures of the space within which one lives. As I argued in Chapter 5, there’s nothing new about this modality of regulation: Governments have used architecture to regulate behavior forever. But what is new is its significance. As life moves onto the Net, more of life will be regulated through the self-conscious design of the space within which life happens. That’s not necessarily a bad thing. If there were a code-based way to stop drunk drivers, I’d be all for it. But neither is this pervasive code-based regulation benign. Due to the manner in which it functions, regulation by code can interfere with the ordinary democratic process by which we hold regulators accountable.

The key criticism that I’ve identified so far is transparency. Code-based regulation — especially of people who are not themselves technically expert — risks making regulation invisible. Controls are imposed for particular policy reasons, but people experience these controls as nature. And that experience, I suggested, could weaken democratic resolve.

Now that’s not saying much, at least about us. We are already a pretty apathetic political culture. And there’s nothing about cyberspace to suggest things are going to be different. Indeed, as Castronova observes about virtual worlds: “How strange, then, that one does not find much democracy at all in synthetic worlds. Not a trace, in fact. Not a hint of a shadow of a trace. It’s not there. The typical governance model in synthetic worlds consists of isolated moments of oppressive tyranny embedded in widespread anarchy.”

But if we could put aside our own skepticism about our democracy for a moment, and focus at least upon aspects of the Internet and cyberspace that we all agree matter fundamentally, then I think we will all recognize a point that, once recognized, seems obvious: If code regulates, then in at least some critical contexts, the kind of code that regulates is critically important.

By “kind” I mean to distinguish between two types of code: open and closed. By “open code” I mean code (both software and hardware) whose functionality is transparent at least to one knowledgeable about the technology. By “closed code,” I mean code (both software and hardware) whose functionality is opaque. One can guess what closed code is doing; and with enough opportunity to test, one might well reverse engineer it. But from the technology itself, there is no reasonable way to discern what the functionality of the technology is.

The terms “open” and “closed” code will suggest to many a critically important debate about how software should be developed. What most call the “open source software movement,” but which I, following Richard Stallman, call the “free software movement,” argues (in my view at least) that there are fundamental values of freedom that demand that software be developed as free software. The opposite of free software, in this sense, is proprietary software, where the developer hides the functionality of the software by distributing digital objects that are opaque about the underlying design.

I will describe this debate more in the balance of this chapter. But importantly, the point I am making about “open” versus “closed” code is distinct from the point about how code gets created. I personally have very strong views about how code should be created. But whatever side you are on in the “free vs. proprietary software” debate in general, in at least the contexts I will identify here, you should be able to agree with me first, that open code is a constraint on state power, and second, that in at least some cases, code must, in the relevant sense, be “open.” To set the stage for this argument, I want to describe two contexts in which I will argue that we all should agree that the kind of code deployed matters. The balance of the chapter then makes that argument.

Bytes that sniff (p. 140f)

In Chapter 2, I described technology that at the time was a bit of science fiction. In the five years since, that fiction has become even less fictional. In 1997, the government announced a project called Carnivore. Carnivore was to be a technology that sifted through e-mail traffic and collected just those emails written by or to a particular and named individual. The FBI intended to use this technology, pursuant to court orders, to gather evidence while investigating crimes.

In principle, there’s lots to praise in the ideals of the Carnivore design. The protocols required a judge to approve this surveillance. The technology was intended to collect data only about the target of the investigation. No one else was to be burdened by the tool. No one else was to have their privacy compromised.

But whether the technology did what it was said to do depends upon its code. And that code was closed. The contract the government let with the vendor that developed the Carnivore software did not require that the source for the software be made public. It instead permitted the vendor to keep the code secret.
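To see why the answer "depends upon its code," consider a purely hypothetical filter of the kind described. This is not Carnivore's implementation, which was never published; the function names and the sample traffic are invented for illustration, and only Python's standard `email` package is used.

```python
from email import message_from_string
from email.utils import getaddresses


def involves_target(raw_email: str, target: str) -> bool:
    """True only if the target address appears as a sender or a recipient."""
    msg = message_from_string(raw_email)
    fields = msg.get_all("From", []) + msg.get_all("To", [])
    addresses = {addr.lower() for _name, addr in getaddresses(fields)}
    return target.lower() in addresses


def collect(traffic: list[str], target: str) -> list[str]:
    """Keep only the messages written by or to the named target."""
    return [raw for raw in traffic if involves_target(raw, target)]


# Hypothetical traffic passing the tap.
TRAFFIC = [
    "From: alice@example.org\nTo: target@example.org\nSubject: a\n\nbody",
    "From: bob@example.org\nTo: carol@example.org\nSubject: b\n\nbody",
    "From: Target <target@example.org>\nTo: dave@example.org\nSubject: c\n\nbody",
]

collected = collect(TRAFFIC, "target@example.org")
```

Changing the single condition in `involves_target` (for instance, to always return `True`) would silently widen the collection to everyone's mail. With the source visible, a reader can check that condition; with closed code, the claim has to be taken on trust.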

Now it’s easy to understand why the vendor wanted its code kept secret. In general, inviting others to look at your code is much like inviting them to your house for dinner: There’s lots you need to do to make the place presentable. In this case in particular, the DOJ may have been concerned about security. Substantively, however, the vendor might want to use components of the software in other software projects. If the code is public, the vendor might lose some advantage from that transparency. These advantages for the vendor mean that it would be more costly for the government to insist upon a technology that was delivered with its source code revealed.

And so the question should be whether there’s something the government gains from having the source code revealed. And here’s the obvious point: As the government quickly learned as it tried to sell the idea of Carnivore, the fact that its code was secret was costly. Much of the government’s efforts were devoted to trying to build trust around its claim that Carnivore did just what it said it did. But the argument “I’m from the government, so trust me” doesn’t have much weight. And thus, the efforts of the government to deploy this technology — again, a valuable technology if it did what it said it did — were hampered.

I don’t know of any study that tries to evaluate the cost the government faced because of the skepticism about Carnivore versus the cost of developing Carnivore in an open way. I would be surprised if the government’s strategy made fiscal sense. But whether or not it was cheaper to develop closed rather than open code, it shouldn’t be controversial that the government has an independent obligation to make its procedures — at least in the context of ordinary criminal prosecution — transparent. I don’t mean that the investigator needs to reveal the things he thinks about when deciding which suspects to target. I mean instead the procedures for invading the privacy interests of ordinary citizens.

The only kind of code that can do that is “open code.” And the small point I want to insist upon just now is that where transparency of government action matters, so too should the kind of code it uses. This is not the claim that all government code should be public. I believe there are legitimate areas within which the government can act secretly. More particularly, where transparency would interfere with the function itself, then there’s a good argument against transparency. But there were very limited ways in which a possible criminal suspect could more effectively evade the surveillance of Carnivore just because its code was open. And thus, again, open code should, in my view, have been the norm.

Code on the Net (S. 143ff)

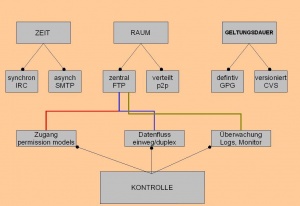

I’ve spent lots of time talking about “code.” It’s time to be a bit more specific about what “code” in the context of the Internet is, in what sense we should consider this code to be “open,” and in what contexts its openness will matter.

As I’ve mentioned, the Internet is constructed by a set of protocols together referred to as TCP/IP. The TCP/IP suite includes a large number of protocols that feed different “layers” of the network. The standard model for describing layers of a network is the Open Systems Interconnection (OSI) reference model. It describes seven network layers, each representing a “function performed when data is transferred between cooperating applications across” the network. But the TCP/IP suite is not as well articulated in that model. According to Craig Hunt, “most descriptions of TCP/IP define three to five functional levels in the protocol architecture.” In my view, it is simplest to describe four functional layers in a TCP/IP architecture. From the bottom of the stack up, we can call these the data link, network, transport, and application layers.

Three layers constitute the essential plumbing of the Internet, hidden in the Net’s walls. (The faucets work at the next layer; be patient.) At the very bottom, just above the physical layer of the Internet, in the data link layer, very few protocols operate, since that handles local network interactions exclusively. More protocols exist at the next layer up, the network layer, where the IP protocol is dominant. It routes data between hosts and across network links, determining which path the data should take. At the next layer up, the transport layer, two different protocols dominate—TCP and UDP. These negotiate the flow of data between two network hosts. (The difference between the two is reliability—UDP offers no reliability guarantee.)

The protocols together function as a kind of odd UPS. Data are passed from the application to the transport layer.
There the data are placed in a (virtual) box and a (virtual) label is slapped on. That label ties the contents of the box to particular processes. (This is the work of the TCP or UDP protocols.) That box is then passed to the network layer, where the IP protocol puts the package into another package, with its own label. This label includes the origination and destination addresses. That box then can be further wrapped at the data link layer, depending on the specifics of the local network (whether, for example, it is an Ethernet network). The whole process is thus a bizarre packaging game: A new box is added at each layer, and a new label on each box describes the process at that layer. At the other end, the packaging process is reversed: Like a Russian doll, each package is opened at the proper layer, until at the end the machine recovers the initial application data.

On top of these three layers is the application layer of the Internet. Here protocols “proliferate.” These include the most familiar network application protocols, such as FTP (file transfer protocol, a protocol for transferring files), SMTP (simple mail transfer protocol, a protocol for transferring mail), and HTTP (hyper text transfer protocol, a protocol to publish and read hypertext documents across the Web). These are rules for how a client (your computer) will interact with a server (where the data are), or with another computer (in peer-to-peer services), and the other way around.

These four layers of protocols are “the Internet.” Building on simple blocks, the system makes possible an extraordinary range of interaction. It is perhaps not quite as amazing as nature—think of DNA—but it is built on the same principle: keep the elements simple, and the compounds will astound.

When I speak about regulating the code, I’m not talking about changing these core TCP/IP protocols.
(Though in principle, of course, they could be regulated, and others have suggested that they should be.) In my view these components of the network are fixed. If you required them to be different, you’d break the Internet. Thus rather than imagining the government changing the core, the question I want to consider is how the government might either (1) complement the core with technology that adds regulability, or (2) regulate applications that connect to the core. Both will be important, but my focus is on the code that plugs into the Internet. I will call that code the “application space” of the Internet. This includes all the code that implements TCP/IP protocols at the application layer—browsers, operating systems, encryption modules, Java, e-mail systems, P2P, whatever elements you want. The question for the balance of this chapter is: What is the character of that code that makes it susceptible to regulation?

A SHORT HISTORY OF CODE ON THE NET

In the beginning, of course, there were very few applications on the Net. The Net was no more than a protocol for exchanging data, and the original programs simply took advantage of this protocol. The file transfer protocol (FTP) was born early in the Net’s history; the electronic message protocol (SMTP) was born soon after. It was not long before a protocol to display directories in a graphical way (Gopher) was developed. And in 1991 the most famous of protocols—the hyper text transfer protocol (HTTP) and hyper text markup language (HTML)—gave birth to the World Wide Web.

Each protocol spawned many applications. Since no one had a monopoly on the protocol, no one had a monopoly on its implementation. There were many FTP applications and many e-mail servers. There were even a large number of browsers. The protocols were open standards, gaining their blessing from standards bodies such as the Internet Engineering Task Force (IETF) and, later, the W3C. Once a protocol was specified, programmers could build programs that utilized it.

Much of the software implementing these protocols was “open,” at least initially—that is, the source code for the software was available along with the object code. This openness was responsible for much of the early Net’s growth. Others could explore how a program was implemented and learn from that example how better to implement the protocol in the future.

The World Wide Web is the best example of this point. Again, the code that makes a web page appear as it does is called the hyper text markup language, or HTML. With HTML, you can specify how a web page will appear and to what it will be linked. The original HTML was proposed in 1990 by the CERN researchers Tim Berners-Lee and Robert Cailliau. It was designed to make it easy to link documents at a research facility, but it quickly became obvious that documents on any machine on the Internet could be linked.
Berners-Lee and Cailliau made both HTML and its companion HTTP freely available for anyone to take.

And take them people did, at first slowly, but then at an extraordinary rate. People started building web pages and linking them to others. HTML became one of the fastest-growing computer languages in the history of computing. Why? One important reason was that HTML was always “open.” Even today, on most browsers in distribution, you can always reveal the “source” of a web page and see what makes it tick. The source remains open: You can download it, copy it, and improve it as you wish. Copyright law may protect the source code of a web page, but in reality it protects it very imperfectly. HTML became as popular as it did primarily because it was so easy to copy. Anyone, at any time, could look under the hood of an HTML document and learn how the author produced it.

Openness—not property or contract but free code and access—created the boom that gave birth to the Internet that we now know. And it was this boom that then attracted the attention of commerce. With all this activity, commerce rightly reasoned, surely there was money to be made.

Historically the commercial model for producing software has been different. Though the history began even as the open code movement continued, commercial software vendors were not about to produce “free” (what most call “open source”) software. Commercial vendors produced software that was closed—that traveled without its source and was protected against modification both by the law and by its own code.

By the second half of the 1990s—marked most famously by Microsoft’s Windows 95, which came bundled Internet-savvy—commercial software vendors began producing “application space” code. This code was increasingly connected to the Net—it increasingly became code “on” the Internet. But for the most part, the code remained closed.

That began to change, however, around the turn of the century.
Especially in the context of peer-to-peer services, technologies emerged that were dominant and “open.” More importantly, the protocols these technologies depended upon were unregulated. Thus, for example, the protocol that the peer-to-peer client Grokster used to share content on the Internet is itself an open standard that anyone can use. Many commercial entities tried to use that standard, at least until the Supreme Court’s decision in Grokster. But even if that decision inspires every commercial entity to abandon the StreamCast network, noncommercial implementations of the protocol will still exist.

The same mix between open and closed exists in both browsers and blogging software. Firefox is the more popular current implementation of the Mozilla technology—the technology that originally drove the Netscape browser. It competes with Microsoft’s Internet Explorer and a handful of other commercial browsers. Likewise, WordPress is an open-source blogging tool that competes with a handful of other proprietary blogging tools.

This recent growth in open code builds upon a long tradition. Part of the motivation for that tradition is ideological, or values based. Richard Stallman is the inspiration here. In 1984, Stallman began the Free Software Foundation with the aim of fueling the growth of free software. A MacArthur Fellow who gave up his career to commit himself to the cause, Stallman has devoted the last twenty years of his life to free software. That work began with the GNU project, which sought to develop a free operating system. By 1991, the GNU project had just about everything it needed, except a kernel. That final challenge was taken up by an undergraduate at the University of Helsinki. That year, Linus Torvalds posted on the Internet the kernel of an operating system. He invited the world to extend and experiment with it.
People took up the challenge, and slowly, through the early 1990s, marrying the GNU project with Torvalds’s kernel, they built an operating system—GNU/Linux. By 1998, it had become apparent to all that GNU/Linux was going to be an important competitor to the Microsoft operating system. Microsoft may have imagined in 1995 that by 2000 there would be no other server operating system available except Windows NT, but when 2000 came around, there was GNU/Linux, presenting a serious threat to Microsoft in the server market. Now in 2007, Linux-based web servers continue to gain market share at the expense of Microsoft systems.

GNU/Linux is amazing in many ways. It is amazing first because it is theoretically imperfect but practically superior. Linus Torvalds rejected what computer science told him was the ideal operating system design, and instead built an operating system that was designed for a single processor (an Intel 386) and not cross-platform-compatible. Its creative development, and the energy it inspired, slowly turned GNU/Linux into an extraordinarily powerful system. As of this writing, GNU/Linux has been ported to at least eighteen different computer architecture platforms—from the original Intel processors, to Apple’s PowerPC chip, to Sun SPARC chips, and mobile devices using ARM processors. Creative hackers have even ported Linux to squeeze onto Apple’s iPod and old Atari systems. Although initially designed to speak only one language, GNU/Linux has become the lingua franca of free software operating systems.

What makes a system open is a commitment among its developers to keep its core code public—to keep the hood of the car unlocked. That commitment is not just a wish; Stallman encoded it in a license that sets the terms that control the future use of most free software. This is the Free Software Foundation’s General Public License (GPL), which requires that any code licensed with GPL (as GNU/Linux is) keep its source free.
GNU/Linux was developed by an extraordinary collection of hackers worldwide only because its code was open for others to work on.

Its code, in other words, sits in the commons. Anyone can take it and use it as she wishes. Anyone can take it and come to understand how it works. The code of GNU/Linux is like a research program whose results are always published for others to see. Everything is public; anyone, without having to seek the permission of anyone else, may join the project.

This project has been wildly more successful than anyone ever imagined. In 1992, most would have said that it was impossible to build a free operating system from volunteers around the world. In 2002, no one could doubt it anymore. But if the impossible could become possible, then no doubt it could become impossible again. And certain trends in computing technology may create precisely this threat.

For example, consider the way Active Server Pages (ASP) code works on the network. When you go to an ASP page on the Internet, the server runs a program—a script to give you access to a database, for example, or a program to generate new data you need. ASPs are increasingly popular ways to provide program functionality. You use them all the time when you are on the Internet. But the code that runs ASPs is not technically “distributed.” Thus, even if the code is produced using GPL’d code, there’s no GPL obligation to release it to anyone. Therefore, as more and more of the infrastructure of networked life becomes governed by ASP, less and less will be effectively set free by free license.

“Trusted Computing” creates another threat to the open code ecology. Launched as a response to virus and security threats within a networked environment, the key technical feature of “trusted computing” is that the platform blocks programs that are not cryptographically signed or verified by the platform.
For example, if you want to run a program on your computer, your computer would first verify that the program is certified by one of the authorities recognized by the computer operating system, and “incorporat[ing] hardware and software . . . security standards approved by the content providers themselves.” If it isn’t, the program wouldn’t run.

In principle, of course, if the cost of certifying a program were tiny, this limitation might be unproblematic. But the fear is that this restriction will operate to effectively block open code projects. It is not easy for a certifying authority to actually know what a program does; that means certifying authorities won’t be keen to certify programs they can’t trust. And that in turn will effect a significant discrimination against open code.
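To make the certification check described above concrete, here is a minimal Python sketch of a platform that runs a program only if a recognized authority has certified that exact code. Everything in it is an assumption for illustration: the authority names, the keys, and the functions `certify` and `may_run` are hypothetical, and a keyed hash (HMAC) from the standard library stands in for the public-key signatures a real trusted computing platform would presumably use.

```python
import hashlib
import hmac

# Hypothetical authorities the platform recognizes, each with a secret key.
# Assumption: real platforms would use public-key signatures; HMAC is a
# standard-library stand-in so the sketch stays self-contained.
TRUSTED_AUTHORITIES = {
    "vendor-a": b"vendor-a-secret",
    "vendor-b": b"vendor-b-secret",
}


def certify(authority_key: bytes, program: bytes) -> str:
    """Return the tag an authority would attach to a program it certifies."""
    return hmac.new(authority_key, program, hashlib.sha256).hexdigest()


def may_run(program: bytes, authority: str, tag: str) -> bool:
    """Allow execution only if a recognized authority certified this exact code."""
    key = TRUSTED_AUTHORITIES.get(authority)
    if key is None:
        return False  # certifier is not on the platform's list: blocked
    expected = certify(key, program)
    # Constant-time comparison of the expected tag with the presented tag.
    return hmac.compare_digest(expected, tag)


program = b"print('hello')"
good_tag = certify(TRUSTED_AUTHORITIES["vendor-a"], program)

# Certified by a recognized authority and unmodified.
print(may_run(program, "vendor-a", good_tag))
# Modified after certification, so the tag no longer matches.
print(may_run(program + b" # patched", "vendor-a", good_tag))
# Certifier the platform does not recognize.
print(may_run(program, "community-project", good_tag))
```

The second and third calls are the cases the passage worries about: code that anyone may modify, or that comes from a project no recognized authority has vouched for, fails the check regardless of what the code actually does.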

Back to Code: Kommunikation und Kontrolle (lecture by Hrachovec, 2007/08)