Business Cases (BD2015)

Contents

- 1 Paul Zikopoulos, Chris Eaton: Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data

- 2 Data Values

- 3 Arvind Sathi: Big Data Analytics: Disruptive Technologies for Changing the Game

- 4 Viktor Mayer-Schönberger, Kenneth Cukier: Big Data: A Revolution That Will Transform How We Live, Work, and Think

- 5 Rob Kitchin: Big Data, new epistemologies and paradigm shifts

Paul Zikopoulos, Chris Eaton: Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data

- Big Data solutions are ideal for analyzing not only raw structured data, but also semistructured and unstructured data from a wide variety of sources.

- Big Data solutions are ideal when all, or most, of the data needs to be analyzed rather than a sample of it, or when a sample isn’t nearly as effective as a larger set of data from which to derive analysis.

- Big Data solutions are ideal for iterative and exploratory analysis when business measures on data are not predetermined.

...

When it comes to solving information management challenges using Big Data technologies, we suggest you consider the following:

- Is the reciprocal of the traditional analysis paradigm appropriate for the business task at hand? Better yet, can you see a Big Data platform complementing what you currently have in place for analysis and achieving synergy with existing solutions for better business outcomes?

For example, data bound for the analytic warehouse typically has to be cleansed, documented, and trusted before it’s neatly placed into a strict warehouse schema (and, of course, if it can’t fit into a traditional row-and-column format, in most cases it can’t even get to the warehouse). In contrast, a Big Data solution will not only leverage data that is typically unsuitable for a traditional warehouse environment, and in massive volumes, but will also give up some of the formality and “strictness” of the data. The benefit is that you can preserve the fidelity of the data and gain access to mountains of information for exploring and discovering business insights before running it through the due diligence you’re accustomed to; that data can then participate in a cyclic system, enriching the models in the warehouse.

- Big Data is well suited for solving information challenges that don’t natively fit within a traditional relational database approach to the problem at hand. It’s important to understand that conventional database technologies are an important and relevant part of an overall analytic solution. In fact, they become even more vital when used in conjunction with your Big Data platform.
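A minimal sketch of the contrast drawn above between a strict warehouse load and a Big Data landing zone, assuming JSON-formatted input records. The record fields, `WAREHOUSE_SCHEMA`, and the function names are hypothetical, and the labels "schema-on-write" and "schema-on-read" are common shorthand rather than the authors' terms. The point is only that the strict load discards records that don't fit, while the raw store keeps everything and applies structure when a question is asked.

```python
import json

# Hypothetical raw records arriving from different sources; field names are illustrative.
RAW_RECORDS = [
    '{"customer_id": 1, "amount": 19.99}',
    '{"customer_id": 2, "amount": "n/a", "note": "refund pending"}',
    '{"customer_id": 3, "amount": 5.00, "tweet": "love the new box"}',
]

# Assumed strict warehouse schema: exactly these fields with exactly these types.
WAREHOUSE_SCHEMA = {"customer_id": int, "amount": float}


def load_schema_on_write(raw_records):
    """Warehouse-style load: keep only rows that fit the strict schema."""
    rows = []
    for line in raw_records:
        record = json.loads(line)
        if set(record) != set(WAREHOUSE_SCHEMA):
            continue  # extra or missing fields: rejected before it is ever stored
        if not all(isinstance(record[k], t) for k, t in WAREHOUSE_SCHEMA.items()):
            continue  # wrong types: rejected as well
        rows.append(record)
    return rows


def store_raw(raw_records):
    """Big Data-style landing zone: keep every record exactly as received."""
    return list(raw_records)


def read_amounts(raw_store):
    """Apply a schema only at query time, tolerating messy values."""
    amounts = []
    for line in raw_store:
        value = json.loads(line).get("amount")
        try:
            amounts.append(float(value))
        except (TypeError, ValueError):
            pass  # unparseable at read time, but the raw record is still preserved
    return amounts


warehouse = load_schema_on_write(RAW_RECORDS)
raw_store = store_raw(RAW_RECORDS)
print(len(warehouse), len(raw_store), read_amounts(raw_store))
```

Here the warehouse load keeps one of the three records, while the raw store keeps all three and still yields two usable amounts at query time; the messy record is not lost and can be revisited once someone decides how to interpret it.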

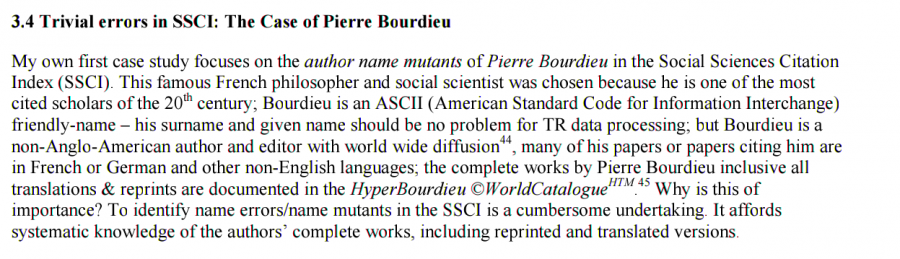

```
Dec 9 22:13:58 phaidon rsyslogd: -- MARK --
Dec 9 22:16:24 phaidon sshd[6851]: warning: /etc/hosts.deny, line 197: can't verify hostname: getaddrinfo(mail1.ceplt.tk): Name or service not known
Dec 9 22:16:27 phaidon sshd[6859]: warning: /etc/hosts.deny, line 197: can't verify hostname: getaddrinfo(.): Name or service not known
Dec 9 22:16:31 phaidon sshd[6859]: reverse mapping checking getaddrinfo for . [186.225.255.97] failed - POSSIBLE BREAK-IN ATTEMPT!
Dec 9 22:16:31 phaidon sshd[6859]: Invalid user admin from 186.225.255.97
Dec 9 22:16:31 phaidon sshd[6864]: input_userauth_request: invalid user admin
Dec 9 22:16:35 phaidon sshd[6851]: reverse mapping checking getaddrinfo for mail1.ceplt.tk [208.67.1.25] failed - POSSIBLE BREAK-IN ATTEMPT!
Dec 9 22:16:35 phaidon sshd[6851]: User root from 208.67.1.25 not allowed because not listed in AllowUsers
Dec 9 22:16:35 phaidon sshd[6855]: input_userauth_request: invalid user root
Dec 9 22:18:20 phaidon named[2308]: success resolving 'ns.ptt.js.cn/A' (in 'ptt.js.cn'?) after reducing the advertised EDNS UDP packet size to 512 octets
Dec 9 22:18:22 phaidon named[2308]: success resolving 'ns.jsinfo.net/AAAA' (in 'jsinfo.net'?) after disabling EDNS
Dec 9 22:18:26 phaidon named[2308]: success resolving '207.42.186.222.in-addr.arpa/PTR' (in '186.222.in-addr.arpa'?) after disabling EDNS
Dec 9 22:30:31 phaidon sudo: root : TTY=unknown ; PWD=/root ; USER=eprints ; COMMAND=/opt/eprints3/cgi/irstats.cgi eprints3 update_table
Dec 9 22:30:57 phaidon sudo: root : TTY=unknown ; PWD=/root ; USER=eprints ; COMMAND=/opt/eprints3/cgi/irstats.cgi eprints3 update_metadata
Dec 9 22:33:02 phaidon logrotate: ALERT exited abnormally with [1]
Dec 9 22:33:02 phaidon logrotate: Can not scan /var/cache/awstats: No such file or directory
Dec 9 22:33:02 phaidon logrotate: error: error running non-shared prerotate script for /var/log/apache2/awstats.log of '/var/log/apache2/awstats.log '
Dec 9 22:33:04 phaidon su: (to nobody) root on none
Dec 9 22:33:04 phaidon su: (to nobody) root on none
Dec 9 22:33:04 phaidon su: (to nobody) root on none
Dec 9 22:34:50 phaidon sshd[7992]: Invalid user admin from 109.161.240.218
Dec 9 22:39:54 phaidon su: (to cyrus) root on none
Dec 9 22:39:54 phaidon ctl_mboxlist[8201]: DBERROR: reading /var/lib/imap/db/skipstamp, assuming the worst: No such file or directory
Dec 9 22:39:54 phaidon ctl_mboxlist[8201]: skiplist: checkpointed /var/lib/imap/mailboxes.db (0 records, 144 bytes) in 0 seconds
Dec 9 22:53:50 phaidon sshd[8596]: Invalid user admin from 195.191.242.5
Dec 9 22:59:52 phaidon sshd[8755]: refused connect from ::ffff:189.3.2.116 (189.3.2.116)
```

Statistics: Philosophische Audiothek

IT for IT Log Analytics

Log analytics is a common use case for an inaugural Big Data project. We like to refer to all those logs and trace data that are generated by the operation of your IT solutions as data exhaust. Enterprises have lots of data exhaust, and it’s pretty much a pollutant if it’s just left around for a couple of hours or days in case of emergency and then simply purged. Why? Because we believe data exhaust has concentrated value, and IT shops need to figure out a way to store and extract value from it. Some of the value derived from data exhaust is obvious and has been transformed into value-added click-stream data that records every gesture, click, and movement made on a web site. Some data exhaust value isn’t so obvious. At the DB2 development labs in Toronto (Ontario, Canada), engineers derive terrific value by using BigInsights for performance optimization analysis. For example, consider a large, clustered transaction-based database system and try to preemptively find out where small optimizations in correlated activities across separate servers might be possible. There are needles (some performance optimizations) within a haystack (mountains of stack trace logs across many servers). Trying to find correlations across tens of gigabytes of per-core stack trace information is indeed a daunting task, but a Big Data platform made it possible to identify previously unreported areas for performance optimization tuning.

Quite simply, IT departments need logs at their disposal, and today they just can’t store enough logs and analyze them in a cost-efficient manner, so logs are typically kept for emergencies and discarded as soon as possible. Another reason why IT departments keep large amounts of data in logs is to look for rare problems. It is often the case that the most common problems are known and easy to deal with, but the problem that happens “once in a while” is typically more difficult to diagnose and prevent from recurring.

We think that IT yearns (or should yearn) for log longevity. We also think that both business and IT know there is value in these logs, and that’s why we often see lines of business duplicating these logs and ending up with scattershot retention and nonstandard (or duplicative) analytic systems that vary greatly by team. Not only is this ultimately expensive (more aggregate data needs to be stored, often in expensive systems), but since only slices of the data are available, it is nearly impossible to determine holistic trends and issues that span such limited retention periods and partial views of the information.

Today this log history can be retained, but in most cases only for several days or weeks at a time, because it is simply too much data for conventional systems to store, and that, of course, makes it impossible to determine trends and issues that span such a limited retention period. But there are more reasons why log analysis is a Big Data problem aside from its voluminous nature. These logs are semistructured and raw, so they aren’t always suited for traditional database processing. In addition, log formats are constantly changing due to hardware and software upgrades, so they can’t be tied to strict, inflexible analysis paradigms. Finally, not only do you need to analyze the logs over long periods to determine trends and patterns and to pinpoint failures, but you also need to ensure the analysis is done on all the data.
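The semistructured nature of such logs can be illustrated with a small parser for a few of the syslog entries from the sample above. This is a sketch under stated assumptions: the regular expression assumes the classic `Mon D HH:MM:SS host program[pid]: message` layout seen in the sample, and counting "Invalid user" attempts per address is just one example of value extracted from data exhaust. Because formats drift with upgrades, lines that do not match are skipped rather than treated as errors.

```python
import re
from collections import Counter

# A few sshd entries copied from the sample log (one entry per line).
SAMPLE_LOG = """\
Dec 9 22:16:31 phaidon sshd[6859]: Invalid user admin from 186.225.255.97
Dec 9 22:16:35 phaidon sshd[6851]: User root from 208.67.1.25 not allowed because not listed in AllowUsers
Dec 9 22:34:50 phaidon sshd[7992]: Invalid user admin from 109.161.240.218
Dec 9 22:53:50 phaidon sshd[8596]: Invalid user admin from 195.191.242.5
Dec 9 22:59:52 phaidon sshd[8755]: refused connect from ::ffff:189.3.2.116 (189.3.2.116)
"""

# Assumed classic syslog layout; real log formats change over time.
SYSLOG_LINE = re.compile(
    r"^(?P<month>\w{3})\s+(?P<day>\d{1,2})\s+(?P<time>\d{2}:\d{2}:\d{2})\s+"
    r"(?P<host>\S+)\s+(?P<program>[^\[:]+)(?:\[(?P<pid>\d+)\])?:\s+(?P<message>.*)$"
)
INVALID_USER = re.compile(r"Invalid user (?P<user>\S+) from (?P<ip>\S+)")


def parse(lines):
    """Yield parsed records as dicts, silently skipping lines that do not match."""
    for line in lines:
        match = SYSLOG_LINE.match(line)
        if match:
            yield match.groupdict()


def invalid_logins_by_ip(lines):
    """Count sshd 'Invalid user' attempts per source address."""
    counts = Counter()
    for record in parse(lines):
        if record["program"] != "sshd":
            continue
        hit = INVALID_USER.search(record["message"])
        if hit:
            counts[hit.group("ip")] += 1
    return counts


print(invalid_logins_by_ip(SAMPLE_LOG.splitlines()))
```

Run over the five sample lines, this finds three invalid-user attempts from three different addresses. At Big Data scale the same per-line logic would run in parallel over months of retained logs rather than a few minutes of one host's output.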

...

The Fraud Detection Pattern

Fraud detection comes up a lot in the financial services vertical, but if you look around, you’ll find it in any sort of claims- or transaction-based environment (online auctions, insurance claims, underwriting entities, and so on). Pretty much any situation that involves some sort of financial transaction presents the potential for misuse and the ubiquitous specter of fraud. If you leverage a Big Data platform, you have the opportunity to do more than you’ve ever done before to identify it or, better yet, stop it.

Several challenges in the fraud detection pattern are directly attributable to solely utilizing conventional technologies. The most common, and recurring, theme you will see across all Big Data patterns is limits on what can be stored, as well as on the compute resources available to process your intentions. Without Big Data technologies, these factors limit what can be modeled. Less data equals constrained modeling. What’s more, highly dynamic environments commonly have cyclical fraud patterns that come and go in hours, days, or weeks. If the data used to identify or bolster new fraud detection models isn’t available with low latency, by the time you discover these new patterns, it’s too late and some damage has already been done.

Traditionally, in fraud cases, samples and models are used to identify customers that fit a certain kind of profile. The problem with this approach (and this is a trend that you’re going to see in a lot of these use cases) is that although it works, you’re profiling a segment rather than working at the granularity of an individual transaction or person. Quite simply, making a forecast based on a segment is good, but making a decision based upon the actual particulars of an individual transaction is obviously better. To do this, you need to work with a larger set of data than is possible in the traditional approach. In our customer experiences, we estimate that only 20 percent (or maybe less) of the available information that could be useful for fraud modeling is actually being used.
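To make the segment-versus-individual distinction concrete, here is a hedged sketch that contrasts a single segment-wide threshold with a per-customer z-score computed on each customer's own history. The customers, amounts, `SEGMENT_LIMIT`, and the z-score rule are all illustrative assumptions; real fraud models are far richer, and the book does not prescribe this technique.

```python
from statistics import mean, pstdev

# Hypothetical transaction history; customers and amounts are illustrative only.
HISTORY = {
    "alice": [12.0, 15.0, 11.0, 14.0, 13.0],        # small, regular purchases
    "bob": [900.0, 1100.0, 1000.0, 950.0, 1050.0],  # large, regular purchases
}

SEGMENT_LIMIT = 500.0  # assumed segment-wide rule: flag anything above this amount


def segment_flag(amount):
    """Segment-level rule: one threshold for everyone in the profile."""
    return amount > SEGMENT_LIMIT


def individual_flag(customer, amount, z_threshold=3.0):
    """Individual-level rule: compare against this customer's own history."""
    past = HISTORY[customer]
    mu, sigma = mean(past), pstdev(past)
    if sigma == 0:
        return amount != mu  # no variation yet: anything different is unusual
    return abs(amount - mu) / sigma > z_threshold


for customer, amount in [("alice", 400.0), ("bob", 1020.0)]:
    print(customer, amount, segment_flag(amount), individual_flag(customer, amount))
```

A 400-unit purchase slips under the segment limit although it is wildly unusual for the first customer, while a routine 1,020-unit purchase by the second customer trips the segment rule. The individual view needs each customer's full history, which is exactly the larger data set the passage argues for.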

...

Social Media

Although basic insights into social media can tell you what people are saying and how sentiment is trending, they can’t answer what is ultimately a more important question: “Why are people saying what they are saying and behaving in the way they are behaving?” Answering this type of question requires enriching the social media feeds with additional and differently shaped information that’s likely residing in other enterprise systems. Simply put, linking behavior, and the driver of that behavior, requires relating social media analytics back to your traditional data repositories, whether they are SAP, DB2, Teradata, Oracle, or something else. You have to look beyond just the data; you have to look at the interaction of what people are doing with their behaviors, current financial trends, actual transactions that you’re seeing internally, and so on. Sales, promotions, loyalty programs, the merchandising mix, competitor actions, and even variables such as the weather can all be drivers for what consumers feel and how opinions are formed. Getting to the core of why your customers are behaving a certain way requires merging information types in a dynamic and cost-effective way, especially during the initial exploration phases of the project.
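Relating social media analytics back to internal data can be sketched as a join on a shared key. The example below assumes that sentiment scores in the range -1 to 1 have already been produced by some upstream classifier and that posts and sales can both be keyed by date; the data, names, and the choice of an inner join on date are hypothetical simplifications.

```python
from collections import defaultdict

# Hypothetical inputs: scored social posts (date, sentiment) and internal sales by date.
POSTS = [
    ("2011-06-01", -0.6), ("2011-06-01", -0.2),
    ("2011-06-02", 0.4), ("2011-06-02", 0.8),
]
SALES = {"2011-06-01": 1200, "2011-06-02": 1850}  # units sold, from an internal system


def daily_sentiment(posts):
    """Average sentiment score per day."""
    totals, counts = defaultdict(float), defaultdict(int)
    for day, score in posts:
        totals[day] += score
        counts[day] += 1
    return {day: totals[day] / counts[day] for day in totals}


def enrich(posts, sales):
    """Inner-join average daily sentiment with same-day sales."""
    sentiment = daily_sentiment(posts)
    return [
        (day, round(sentiment[day], 2), sales[day])
        for day in sorted(sentiment)
        if day in sales
    ]


print(enrich(POSTS, SALES))
```

The join only puts the two sources side by side; explaining why sentiment moved still requires the additional drivers listed above, such as promotions, competitor actions, or the weather.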

Does it work? Here’s a real-world case: A client introduced a different kind of environmentally friendly packaging for one of its staple brands. Customer sentiment toward the new packaging was somewhat negative, and some months later, after tracking customer feedback and comments, the company discovered an unnerving amount of discontent around the change and moved to a different kind of eco-friendly package. It works, and we credit this progressive company for leveraging Big Data technologies to discover, understand, and react to the sentiment.

We’ll hypothesize that if you don’t have some kind of micro-blog oriented customer sentiment pulse-taking going on at your company, you’re likely losing customers to another company that does. We think watching the world-record Twitter tweets per second (Ttps) index is a telling indicator of the potential impact of customer sentiment. Super Bowl 2011 set a Twitter Ttps record in February 2011 with 4064 Ttps; it was surpassed by the announcement of bin Laden’s death at 5106 Ttps, followed by the devastating Japan earthquake at 6939 Ttps. This Twitter record fell to the sentiment expressed when Paraguay’s football penalty shootout win over Brazil in the Copa America quarterfinal peaked at 7166 Ttps, which could not beat yet another record set on the same day: a U.S. match win in the FIFA Women’s World Cup at 7196 Ttps. When we went to print with this book, the famous singer Beyoncé’s Twitter announcement of her pregnancy peaked at 8868 Ttps and was the standing Ttps record. We think these records are very telling, not just because of the volume and velocity growth, but also because sentiment is being expressed for just about anything and everything, including your products and services. Truly, customer sentiment is everywhere; just ask Lady Gaga (@ladygaga), who is the most followed Tweeter in the world. What can we learn from this? First, everyone is able to express reaction and sentiment in seconds (often without thought or filters) for the world to see, and second, more and more people are expressing their thoughts or feelings about everything and anything.

Data Values

Make Use of Selling Your Personal Information

Citizenme: An App That Will Let You Sell Your Own Data

Academic Data Use

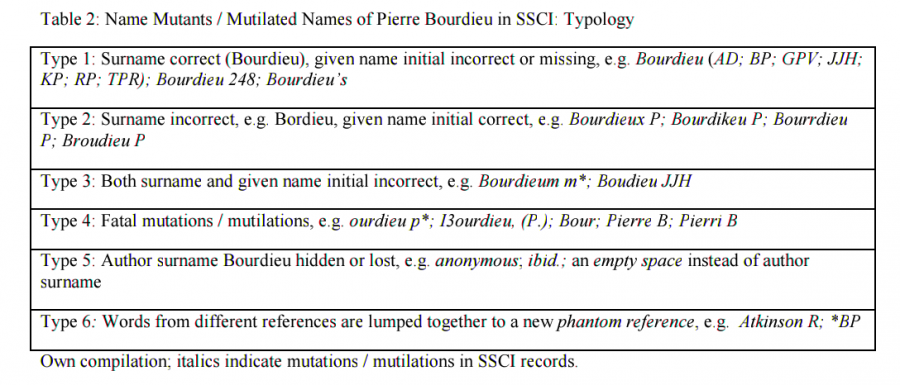

Terje Tüür-Fröhlich: Needless to say my proposal was turned down

Users beware: implications of database errors

Heinz Hauffe: Bibliometrische Verfahren zur Bewertung von Zeitschriften (Bibliometric methods for evaluating journals)

Gerhard Fröhlich: Gegen-Evaluation: Der Impact-Faktor auf dem Prüfstand der Wissenschaftsforschung (Counter-evaluation: the impact factor under scrutiny by science studies)

Arvind Sathi: Big Data Analytics: Disruptive Technologies for Changing the Game

From a Big Data Analytics perspective, a “data bazaar” is the biggest enabler to create an external marketplace, where we collect, exchange, and sell customer information. We are seeing a new trend in the marketplace, in which customer experience from one industry is anonymized, packaged, and sold to other industries. Fortunately for us, Internet advertising came to our rescue in providing an incentive to customers through free services and across-the-board opt-ins.

Internet advertising is a remarkably complex field. With over $26 billion in 2010 revenue, the industry is feeding a fair amount of startup and initial public offering (IPO) activity. What is interesting is that this advertising money is enhancing customer experience. Take the case of Yelp, which lets consumers share their experiences regarding restaurants, shopping, nightlife, beauty spas, active life, coffee and tea, and others. Yelp obtains its revenues through advertising on its website; however, most of the traffic is from people who access Yelp to read customer experiences posted by others. With all this traffic coming to the Internet, the questions that arise are how this Internet usage experience is captured and packaged, and how advertisements are traded among advertisers and publishers.

Drivers for Big Data

Big Data Analytics is creating a new market, where customer data from one industry can be collected, categorized, anonymized, and repackaged for sale to others:

- Location: As we discussed earlier, location is increasingly available to suppliers. Assuming a product is consumed in conjunction with a mobile device, the location of the consumer becomes an important piece of information that may be available to the supplier.

- Cookies: Web browsers carry an enormous amount of information in web cookies. Some of it may be directly associated with touch points.

- Usage data: A number of data providers have started to collect, synthesize, categorize, and package information for reuse. These include credit-rating agencies that rate consumers, social networks with the blogs published or “Like” buttons clicked, and cable companies with audience information. Some of this data may be available only in summary form or anonymized for the protection of customer privacy.
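Because the passage mentions data that is anonymized before being resold, here is a minimal sketch of two common preparatory steps: replacing the customer identifier with a keyed hash (HMAC-SHA256) and coarsening location by rounding coordinates. The field names, key handling, and rounding precision are assumptions for illustration, not anything Sathi specifies. Keyed hashing is pseudonymization rather than true anonymization: combined with other attributes, records can still be re-identified.

```python
import hashlib
import hmac

# Assumed secret held by the data seller; it is never shipped with the data set.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize_id(customer_id: str) -> str:
    """Replace a customer ID with a truncated keyed hash (HMAC-SHA256)."""
    digest = hmac.new(SECRET_KEY, customer_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def coarsen_location(lat: float, lon: float, places: int = 1) -> tuple:
    """Generalize coordinates by rounding (1 decimal place is about 11 km of latitude)."""
    return (round(lat, places), round(lon, places))


def repackage(record: dict) -> dict:
    """Drop direct identifiers and keep only generalized attributes."""
    return {
        "subject": pseudonymize_id(record["customer_id"]),
        "area": coarsen_location(record["lat"], record["lon"]),
        "segment": record["segment"],
    }


# Hypothetical input record.
print(repackage({"customer_id": "C-1001", "lat": 48.2082, "lon": 16.3738, "segment": "cable"}))
```

The same customer always maps to the same pseudonym, so a buyer can still link that customer's records together without learning the original identifier.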

Viktor Mayer-Schönberger, Kenneth Cukier: Big Data: A Revolution That Will Transform How We Live, Work, and Think

Hodder & Stoughton, 2013

To appreciate the degree to which an information revolution is already under way, consider trends from across the spectrum of society. Our digital universe is constantly expanding. Take astronomy. When the Sloan Digital Sky Survey began in 2000, its telescope in New Mexico collected more data in its first few weeks than had been amassed in the entire history of astronomy. By 2010 the survey’s archive teemed with a whopping 140 terabytes of information. But a successor, the Large Synoptic Survey Telescope in Chile, due to come on stream in 2016, will acquire that quantity of data every five days.

Such astronomical quantities are found closer to home as well. When scientists first decoded the human genome in 2003, it took them a decade of intensive work to sequence the three billion base pairs. Now, a decade later, a single facility can sequence that much DNA in a day. In finance, about seven billion shares change hands every day on U.S. equity markets, of which around two-thirds is traded by computer algorithms based on mathematical models that crunch mountains of data to predict gains while trying to reduce risk.

At its core, big data is about predictions. Though it is described as part of the branch of computer science called artificial intelligence, and more specifically, an area called machine learning, this characterization is misleading. Big data is not about trying to “teach” a computer to “think” like humans. Instead, it’s about applying math to huge quantities of data in order to infer probabilities: the likelihood that an email message is spam; that the typed letters “teh” are supposed to be “the”; that the trajectory and velocity of a person jaywalking mean he’ll make it across the street in time, so the self-driving car need only slow slightly. The key is that these systems perform well because they are fed with lots of data on which to base their predictions. Moreover, the systems are built to improve themselves over time, by keeping tabs on which signals and patterns are best to look for as more data is fed in.
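The spam example can be made concrete with a tiny naive Bayes classifier, one common way of inferring such probabilities from word counts. This is a sketch, not the method of any particular product: the training messages are made up, and add-one (Laplace) smoothing is an assumed design choice that keeps unseen words from zeroing out a probability.

```python
import math
from collections import Counter

# Tiny hypothetical training set; real filters learn from millions of messages.
TRAINING = [
    ("win cash now", "spam"),
    ("cheap pills win", "spam"),
    ("meeting notes attached", "ham"),
    ("lunch meeting tomorrow", "ham"),
]


def train(examples):
    """Count words per class and documents per class."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocabulary


def spam_probability(text, model):
    """Naive Bayes with add-one (Laplace) smoothing; returns P(spam | words)."""
    word_counts, class_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    log_scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)  # class prior
        for word in text.split():
            count = word_counts[label][word] + 1  # smoothed word count
            score += math.log(count / (total_words + len(vocabulary)))
        log_scores[label] = score
    # Convert log scores back into a normalized probability.
    top = max(log_scores.values())
    weights = {k: math.exp(v - top) for k, v in log_scores.items()}
    return weights["spam"] / sum(weights.values())


model = train(TRAINING)
print(round(spam_probability("win cash", model), 3))
```

With this toy training set, "win cash" gets a spam probability of 6/7 (about 0.86) and "meeting tomorrow" about 1/7. Retraining on more messages updates the counts, which is the simple sense in which such a system improves as more data is fed in.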

In the future—and sooner than we may think—many aspects of our world will be augmented or replaced by computer systems that today are the sole purview of human judgment. Not just driving or matchmaking, but even more complex tasks. After all, Amazon can recommend the ideal book, Google can rank the most relevant website, Facebook knows our likes, and LinkedIn divines whom we know. The same technologies will be applied to diagnosing illnesses, recommending treatments, perhaps even identifying “criminals” before one actually commits a crime. Just as the Internet radically changed the world by adding communications to computers, so too will big data change fundamental aspects of life by giving it a quantitative dimension it never had before.

MORE

BIG DATA IS ALL ABOUT seeing and understanding the relations within and among pieces of information that, until very recently, we struggled to fully grasp. IBM’s big-data expert Jeff Jonas says you need to let the data “speak to you.” At one level this may sound trivial. Humans have looked to data to learn about the world for a long time, whether in the informal sense of the myriad observations we make every day or, mainly over the last couple of centuries, in the formal sense of quantified units that can be manipulated by powerful algorithms.

The digital age may have made it easier and faster to process data, to calculate millions of numbers in a heartbeat. But when we talk about data that speaks, we mean something more—and different. As noted in Chapter One, big data is about three major shifts of mindset that are interlinked and hence reinforce one another. The first is the ability to analyze vast amounts of data about a topic rather than be forced to settle for smaller sets. The second is a willingness to embrace data’s real-world messiness rather than privilege exactitude. The third is a growing respect for correlations rather than a continuing quest for elusive causality. This chapter looks at the first of these shifts: using all the data at hand instead of just a small portion of it.

Therein lay the tension: Use all the data, or just a little? Getting all the data about whatever is being measured is surely the most sensible course. It just isn’t always practical when the scale is vast. But how to choose a sample? Some argued that purposefully constructing a sample that was representative of the whole would be the most suitable way forward. But in 1934 Jerzy Neyman, a Polish statistician, forcefully showed that such an approach leads to huge errors. The key to avoiding them is to aim for randomness in choosing whom to sample.

Statisticians have shown that sampling precision improves most dramatically with randomness, not with increased sample size. In fact, though it may sound surprising, a randomly chosen sample of 1,100 individual observations on a binary question (yes or no, with roughly equal odds) is remarkably representative of the whole population. In 19 out of 20 cases it is within a 3 percent margin of error, regardless of whether the total population size is a hundred thousand or a hundred million. Why this should be the case is complicated mathematically, but the short answer is that after a certain point early on, as the numbers get bigger and bigger, the marginal amount of new information we learn from each observation is less and less.
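The arithmetic behind the "1,100 observations, 3 percent, 19 out of 20" claim can be checked with the standard margin-of-error formula for a proportion, z·√(p(1−p)/n), with z = 1.96 for 95 percent confidence and the worst case p = 0.5. The sketch below assumes simple random sampling without replacement and applies the finite population correction to show how little the total population size matters.

```python
import math


def margin_of_error(n, population=None, p=0.5, z=1.96):
    """95% margin of error for a proportion from a simple random sample.

    z = 1.96 corresponds to "19 out of 20 cases"; p = 0.5 is the worst case
    for a yes/no question with roughly equal odds. If a population size is
    given, the finite population correction is applied.
    """
    moe = z * math.sqrt(p * (1 - p) / n)
    if population is not None:
        moe *= math.sqrt((population - n) / (population - 1))
    return moe


for size in (100_000, 100_000_000):
    print(f"N = {size:>11,}: ±{margin_of_error(1_100, size):.1%}")
```

For n = 1,100 the margin comes out at roughly 2.9 percent for a population of a hundred thousand and roughly 3.0 percent for a hundred million, in line with the claim in the text.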

The fact that randomness trumped sample size was a startling insight. It paved the way for a new approach to gathering information. Data using random samples could be collected at low cost and yet extrapolated with high accuracy to the whole. As a result, governments could run small versions of the census using random samples every year, rather than just one every decade. And they did. The U.S. Census Bureau, for instance, conducts more than two hundred economic and demographic surveys every year based on sampling, in addition to the decennial census that tries to count everyone. Sampling was a solution to the problem of information overload in an earlier age, when the collection and analysis of data was very hard to do.

The applications of this new method quickly went beyond the public sector and censuses. In essence, random sampling reduces big-data problems to more manageable data problems. In business, it was used to ensure manufacturing quality—making improvements much easier and less costly. Comprehensive quality control originally required looking at every single product coming off the conveyor belt; now a random sample of tests for a batch of products would suffice. Likewise, the new method ushered in consumer surveys in retailing and snap polls in politics. It transformed a big part of what we used to call the humanities into the social sciences.

Random sampling has been a huge success and is the backbone of modern measurement at scale. But it is only a shortcut, a second-best alternative to collecting and analyzing the full dataset. It comes with a number of inherent weaknesses. Its accuracy depends on ensuring randomness when collecting the sample data, but achieving such randomness is tricky. Systematic biases in the way the data is collected can lead to the extrapolated results being very wrong.
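The danger of systematic bias can be illustrated with a small simulation. The population below is hypothetical: its overall "yes" share is exactly 0.5, but only half of it is reachable by the assumed survey channel, and that half leans heavily toward "yes". A random sample lands close to the truth, while an equally large sample drawn only from the reachable half does not.

```python
import random

random.seed(7)  # fixed seed so the sketch is repeatable

# Hypothetical population of 100,000 yes (1) / no (0) answers, overall share exactly 0.5.
# The "reachable" half answers yes 80% of the time, the other half only 20% of the time.
reachable = [1 if i % 5 < 4 else 0 for i in range(50_000)]
unreachable = [1 if i % 5 < 1 else 0 for i in range(50_000)]
population = reachable + unreachable


def share_yes(sample):
    return sum(sample) / len(sample)


true_share = share_yes(population)
random_estimate = share_yes(random.sample(population, 1_100))
biased_estimate = share_yes(random.sample(reachable, 1_100))  # systematic collection bias

print(f"true {true_share:.3f}  random {random_estimate:.3f}  biased {biased_estimate:.3f}")
```

Drawing a larger sample from the reachable half would not help: it would only estimate the wrong share (about 0.8) more precisely.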

Rob Kitchin: Big Data, new epistemologies and paradigm shifts

In contrast, Big Data is characterized by being generated continuously, seeking to be exhaustive and fine-grained in scope, and flexible and scalable in its production. Examples of the production of such data include: digital CCTV; the recording of retail purchases; digital devices that record and communicate the history of their own use (e.g. mobile phones); the logging of transactions and interactions across digital networks (e.g. email or online banking); clickstream data that record navigation through a website or app; measurements from sensors embedded into objects or environments; the scanning of machine-readable objects such as travel passes or barcodes; and social media postings (Kitchin, 2014). These are producing massive, dynamic flows of diverse, fine-grained, relational data. For example, in 2012 Wal-Mart was generating more than 2.5 petabytes of data relating to more than 1 million customer transactions every hour (Open Data Center Alliance, 2012) and Facebook reported that it was processing 2.5 billion pieces of content (links, comments, etc.), 2.7 billion ‘Like’ actions and 300 million photo uploads per day (Constine, 2012).

Traditionally, data analysis techniques have been designed to extract insights from scarce, static, clean and poorly relational data sets, scientifically sampled and adhering to strict assumptions (such as independence, stationarity, and normality), and generated and analysed with a specific question in mind (Miller, 2010). The challenge of analysing Big Data is coping with abundance, exhaustivity and variety, timeliness and dynamism, messiness and uncertainty, high relationality, and the fact that much of what is generated has no specific question in mind or is a by-product of another activity. Such a challenge was until recently too complex and difficult to implement, but has become possible due to high-powered computation and new analytical techniques. These new techniques are rooted in research concerning artificial intelligence and expert systems that have sought to produce machine learning that can computationally and automatically mine and detect patterns and build predictive models and optimize outcomes (Han et al., 2011; Hastie et al., 2009). Moreover, since different models have their strengths and weaknesses, and it is often difficult to prejudge which type of model and its various versions will perform best on any given data set, an ensemble approach can be employed to build multiple solutions (Seni and Elder, 2010). Here, literally hundreds of different algorithms can be applied to a dataset to determine the best or a composite model or explanation (Siegel, 2013), a radically different approach to that traditionally used wherein the analyst selects an appropriate method based on their knowledge of techniques and the data. In other words, Big Data analytics enables an entirely new epistemological approach for making sense of the world; rather than testing a theory by analysing relevant data, new data analytics seek to gain insights ‘born from the data’.
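The ensemble idea can be sketched in a few lines: fit several candidate models, score each on held-out data, keep the best, and optionally combine the strongest into a composite. Three deliberately simple candidates stand in for the "hundreds of different algorithms" mentioned; the data set, the candidates, and averaging the top two are illustrative assumptions rather than a method from the cited works.

```python
import random
from statistics import mean

random.seed(0)  # fixed seed so the run is repeatable

# Hypothetical data set: a roughly linear relationship plus Gaussian noise.
points = [(i / 10, 2.0 * (i / 10) + 1.0 + random.gauss(0, 0.5)) for i in range(200)]
random.shuffle(points)
train, validation = points[:140], points[140:]


def fit_mean(data):
    """Baseline: always predict the average target value."""
    m = mean(y for _, y in data)
    return lambda x: m


def fit_line(data):
    """Ordinary least squares for y = a + b*x."""
    mx = mean(x for x, _ in data)
    my = mean(y for _, y in data)
    b = sum((x - mx) * (y - my) for x, y in data) / sum((x - mx) ** 2 for x, _ in data)
    a = my - b * mx
    return lambda x: a + b * x


def fit_nearest(data):
    """1-nearest-neighbour: copy the target of the closest training point."""
    return lambda x: min(data, key=lambda p: abs(p[0] - x))[1]


def mse(model, data):
    """Mean squared error of a model on (x, y) pairs."""
    return mean((model(x) - y) ** 2 for x, y in data)


CANDIDATES = {"mean": fit_mean, "line": fit_line, "nearest": fit_nearest}


def select(train, validation):
    models = {name: fit(train) for name, fit in CANDIDATES.items()}
    scores = {name: mse(model, validation) for name, model in models.items()}
    ranked = sorted(scores, key=scores.get)
    # Composite: average the predictions of the two best-scoring candidates.
    top_two = [models[name] for name in ranked[:2]]
    scores["composite"] = mse(lambda x: mean(m(x) for m in top_two), validation)
    return ranked[0], scores


best, scores = select(train, validation)
print(best, {name: round(s, 3) for name, s in scores.items()})
```

On this roughly linear data the least-squares line would be expected to win and the mean-only model to do worst. In practice the final choice should be confirmed on a further data set that played no part in selection, since scoring the composite on the same validation split flatters it.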

There is a powerful and attractive set of ideas at work in the empiricist epistemology that runs counter to the deductive approach that is hegemonic within modern science:

- Big Data can capture a whole domain and provide full resolution;

- there is no need for a priori theory, models or hypotheses;

- through the application of agnostic data analytics the data can speak for themselves free of human bias or framing, and any patterns and relationships within Big Data are inherently meaningful and truthful;

- meaning transcends context or domain-specific knowledge, thus can be interpreted by anyone who can decode a statistic or data visualization.

These work together to suggest that a new mode of science is being created, one in which the modus operandi is purely inductive in nature.

Whilst this empiricist epistemology is attractive, it is based on fallacious thinking with respect to the four ideas that underpin its formulation.

First, though Big Data may seek to be exhaustive, capturing a whole domain and providing full resolution, it is both a representation and a sample, shaped by the technology and platform used, the data ontology employed and the regulatory environment, and it is subject to sampling bias (Crawford, 2013; Kitchin, 2013). Indeed, all data provide oligoptic views of the world: views from certain vantage points, using particular tools, rather than an all-seeing, infallible God’s eye view (Amin and Thrift, 2002; Haraway, 1991). As such, data are not simply natural and essential elements that are abstracted from the world in neutral and objective ways and can be accepted at face value; data are created within a complex assemblage that actively shapes its constitution (Ribes and Jackson, 2013).

Second, Big Data does not arise from nowhere, free from ‘the regulating force of philosophy’ (Berry, 2011: 8). Contra, systems are designed to capture certain kinds of data and the analytics and algorithms used are based on scientific reasoning and have been refined through scientific testing. As such, an inductive strategy of identifying patterns within data does not occur in a scientific vacuum and is discursively framed by previous findings, theories, and training; by speculation that is grounded in experience and knowledge (Leonelli, 2012). New analytics might present the illusion of automatically discovering insights without asking questions, but the algorithms used most certainly did arise and were tested scientifically for validity and veracity.

Third, just as data are not generated free from theory, neither can they simply speak for themselves free of human bias or framing. As Gould (1981: 166) notes, ‘inanimate data can never speak for themselves, and we always bring to bear some conceptual framework, either intuitive and ill-formed, or tightly and formally structured, to the task of investigation, analysis, and interpretation’. Making sense of data is always framed – data are examined through a particular lens that influences how they are interpreted. Even if the process is automated, the algorithms used to process the data are imbued with particular values and contextualized within a particular scientific approach. Further, patterns found within a data set are not inherently meaningful. Correlations between variables within a data set can be random in nature and have no or little causal association, and interpreting them as such can produce serious ecological fallacies. This can be exacerbated in the case of Big Data as the empiricist position appears to promote the practice of data dredging – hunting for every association or model.
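The warning about data dredging can be demonstrated with pure noise. The sketch below generates 20 mutually independent random variables, tests all 190 pairs, and counts how many correlations clear the conventional p < 0.05 threshold (|r| > 0.361 for 30 observations). The sizes and seed are arbitrary choices for illustration.

```python
import random
from itertools import combinations

random.seed(2013)  # fixed seed for repeatability


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


# 20 independent, purely random "variables" with 30 observations each.
variables = [[random.gauss(0, 1) for _ in range(30)] for _ in range(20)]

# Dredge every pair; |r| > 0.361 is roughly the two-sided p < 0.05 cut-off for n = 30.
pairs = list(combinations(range(len(variables)), 2))
hits = [(i, j) for i, j in pairs if abs(pearson(variables[i], variables[j])) > 0.361]

print(f"{len(hits)} of {len(pairs)} pairs look 'significant' despite pure noise")
```

Roughly 5 percent of the pairs, about nine or ten, would be expected to look "significant" even though no variable has any relationship to any other; hunting through every possible association all but guarantees some such findings.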

Fourth, the idea that data can speak for themselves suggests that anyone with a reasonable understanding of statistics should be able to interpret them without context or domain-specific knowledge. This is a conceit voiced by some data and computer scientists and other scientists, such as physicists, all of whom have become active in practising social science and humanities research. For example, a number of physicists have turned their attention to cities, employing Big Data analytics to model social and spatial processes and to identify supposed laws that underpin their formation and functions (Bettencourt et al., 2007; Lehrer, 2010). These studies often wilfully ignore a couple of centuries of social science scholarship, including nearly a century of quantitative analysis and model building. The result is an analysis of cities that is reductionist, functionalist and ignores the effects of culture, politics, policy, governance and capital (reproducing the same kinds of limitations generated by the quantitative/positivist social sciences in the mid-20th century).

A similar set of concerns is shared by those in the sciences. Strasser (2012), for example, notes that within the biological sciences, bioinformaticians who have a very narrow and particular way of understanding biology are claiming ground once occupied by the clinician and the experimental and molecular biologist. These scientists are undoubtedly ignoring the observations of Porway (2013): ‘Without subject matter experts available to articulate problems in advance, you get [poor] results . . . . Subject matter experts are doubly needed to assess the results of the work, especially when you’re dealing with sensitive data about human behavior. As data scientists, we are well equipped to explain the “what” of data, but rarely should we touch the question of “why” on matters we are not experts in.’

Put simply, whilst data can be interpreted free of context and domain-specific expertise, such an epistemological interpretation is likely to be anaemic or unhelpful as it lacks embedding in wider debates and knowledge.